The real way to assess both a person’s workload and productivity is to analyze their email: divide the total number of incoming emails by the number of emails containing reminder phrases such as ‘kind reminder’, ‘is it done yet’, ‘what is the status’, etc. The higher the ratio, the better. This works best in remote environments where email is the primary communication medium.
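The reminder count can be sketched in Perl as below. This is a minimal sketch that assumes the emails are already available as plain-text strings (subject plus body); the phrase list and the `count_reminders` helper are hypothetical, and real mail would first need to be exported or parsed.

```perl
use strict;
use warnings;

# Hypothetical reminder phrases; extend the list to match your own mailbox.
my @reminder_phrases = ('kind reminder', 'is it done yet', 'what is the status');

# Build one case-insensitive regex that matches any of the phrases literally.
my $pattern     = join '|', map { quotemeta } @reminder_phrases;
my $reminder_re = qr/$pattern/i;

# Count how many emails contain at least one reminder phrase.
sub count_reminders {
    my (@emails) = @_;
    return scalar grep { /$reminder_re/ } @emails;
}

# Sample mailbox (made-up messages for illustration).
my @emails = (
    'Kind reminder: please send the report',
    'Weekly newsletter',
    'What is the status of ticket 123?',
    'Lunch on Friday?',
);

my $total     = scalar @emails;
my $reminders = count_reminders(@emails);
print "Total: $total, reminders: $reminders\n";
```

Matching is case-insensitive so that "Kind reminder" and "KIND REMINDER" both count; phrase lists in other languages could be added the same way.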

workload = overall emails

productivity = overall emails / reminders

Examples:

- Bob received 30 emails, 5 of which are reminders. Bob’s workload is 30; his productivity is 30/5 = 6.

- Jon received 75 emails, 25 of which are reminders. Jon’s workload is 75; his productivity is 75/25 = 3.

- Satish received 30 emails and just 3 reminders. Workload 30, productivity 30/3 = 10.

- DemiGod received 45 emails and just 1 reminder. Workload 45, productivity 45/1 = 45.
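The two formulas can be checked against the examples above with a short Perl sketch. One case the formula leaves open is a person with zero reminders, where the division is undefined; as an assumption of this sketch only, zero reminders is treated as one.

```perl
use strict;
use warnings;

# productivity = overall emails / reminders (workload is simply overall emails).
# Assumption: zero reminders is treated as one to avoid dividing by zero.
sub productivity {
    my ($emails, $reminders) = @_;
    my $divisor = $reminders > 0 ? $reminders : 1;
    return $emails / $divisor;
}

# Example data from the text: name => [overall emails, reminders]
my %people = (
    Bob     => [30, 5],
    Jon     => [75, 25],
    Satish  => [30, 3],
    DemiGod => [45, 1],
);

for my $name (sort keys %people) {
    my ($emails, $reminders) = @{ $people{$name} };
    printf "%-8s workload %3d, productivity %g\n",
        $name, $emails, productivity($emails, $reminders);
}
```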

So if you sort people by productivity from lowest to highest, the delayers show up right away. Then glance at their workload. If both workload and productivity are low, the person could be laid off immediately with no replacement required. High workload with low productivity: time to duplicate the position. High workload with high productivity could be promotion material.
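The sorting and decision rules above can be sketched in Perl. The text does not say what counts as "low" or "high", so the cut-offs `$HIGH_WORKLOAD` and `$HIGH_PRODUCTIVITY` are hypothetical values chosen for illustration; the text also gives no rule for low workload with high productivity, so that case is reported as "no action suggested".

```perl
use strict;
use warnings;

# Hypothetical cut-offs; the text does not define "low" and "high".
my $HIGH_WORKLOAD     = 40;
my $HIGH_PRODUCTIVITY = 5;

# Map workload/productivity to the actions suggested in the text.
sub classify {
    my ($workload, $productivity) = @_;
    my $busy       = $workload     >= $HIGH_WORKLOAD;
    my $productive = $productivity >= $HIGH_PRODUCTIVITY;
    return 'layoff candidate'       if !$busy && !$productive;
    return 'duplicate the position' if  $busy && !$productive;
    return 'promotion material'     if  $busy &&  $productive;
    return 'no action suggested';    # low workload, high productivity
}

# name => [workload, productivity], taken from the examples
my %people = (
    Bob     => [30, 6],
    Jon     => [75, 3],
    Satish  => [30, 10],
    DemiGod => [45, 45],
);

# Sort by productivity, lowest first, so the delayers come out on top.
my @sorted = sort { $people{$a}[1] <=> $people{$b}[1] } keys %people;

for my $name (@sorted) {
    my ($workload, $productivity) = @{ $people{$name} };
    printf "%-8s productivity %2d, workload %2d: %s\n",
        $name, $productivity, $workload, classify($workload, $productivity);
}
```

With these cut-offs Jon comes first and is flagged for a duplicated position, while DemiGod comes last as promotion material.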

Some processes have a long approval cycle that delays the final execution of a task. The delay alone is disruptive, and some approvers may miss an approval entirely, which restarts the process from the beginning. This complicates and delays execution dramatically.

What is wrong here is that an approver has no accountability for giving or withholding an approval.

The correct way to collect approvals is approval by default. If an approver (or their substitute) fails to review a task and respond within a pre-agreed period (one business day is fine for most cases), the task is approved by default and the process moves forward. If a default approval causes any damage, the approver who failed to review the task assumes full accountability for it.

This approach makes every approver personally interested in making the approval decision in time, while keeping process execution quick.
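The approval-by-default rule can be sketched in Perl as below. This is an illustration under stated assumptions: the deadline is counted in business days that skip only Saturdays and Sundays (public holidays are ignored), all times are UTC, and the `resolve_approval` helper with its `decision`, `sla_days` and `approver` fields is hypothetical. `Time::Local` is a core Perl module.

```perl
use strict;
use warnings;
use Time::Local qw(timegm);

my $DAY = 24 * 60 * 60;

# Add N business days to an epoch timestamp, skipping Saturdays and Sundays.
# Assumption: public holidays are ignored and all times are UTC.
sub add_business_days {
    my ($epoch, $days) = @_;
    while ($days > 0) {
        $epoch += $DAY;
        my $wday = (gmtime $epoch)[6];    # 0 = Sunday, 6 = Saturday
        $days-- unless $wday == 0 || $wday == 6;
    }
    return $epoch;
}

# Returns (status, accountable person). An explicit decision always stands;
# silence past the deadline becomes an approval by default, and the silent
# approver is recorded as accountable for it.
sub resolve_approval {
    my (%req) = @_;
    return ($req{decision}, undef) if defined $req{decision};
    my $deadline = add_business_days($req{requested_at}, $req{sla_days} // 1);
    return ('pending', undef) if $req{now} < $deadline;
    return ('approved by default', $req{approver});
}

# Request filed on Friday 2024-03-01 10:00 UTC, checked on Monday 11:00 UTC.
my $requested = timegm(0, 0, 10, 1, 2, 2024);
my ($status, $who) = resolve_approval(
    requested_at => $requested,
    now          => timegm(0, 0, 11, 4, 2, 2024),
    approver     => 'alice',    # hypothetical approver
);
print $status, (defined $who ? ", accountable: $who" : ''), "\n";
```

A request filed on Friday morning therefore stays pending over the weekend and is approved by default on Monday morning, with the silent approver on record.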

In my experience, I worked at an IT support company that had both a team dedicated to a single project and a team shared among several projects. Being part of a dedicated team is simple: one goal, work shared among everyone, team spirit and dedication, and possibly a single lead/manager.

Shared teams are worse. They introduce additional complications that must be managed:

- Each person has to learn the contractual scope of work of multiple projects.

- Since projects are never identical, the subsets of technical expertise each employee needs multiply with every added project.

- Vacation, leave, and sick-leave planning becomes messy because of the two complications above: each expertise subset must be shared among at least 3 employees to ensure the service stays covered during an expert’s absence.

- Different projects usually mean different customers, each with their own ITSM/systems administration tools. Each member must know how to use all the tools of every project.

- Different projects follow different subsets of business processes and different escalation/responsibility charts.

- KPI reporting multiplies with the number of projects.

- Task prioritization is tough. Imagine having two highest-priority incidents for two different customers during a scheduled change for a third one. This creates interruptions: a support engineer jumps from one task to another, often before the previous task is completed. Too often.

- Management multiplication. Each project tends to have a separate delivery/project/account/name_it manager who is interested in achieving their own project’s KPIs, which destroys a shared team’s dedication.

- Capacity management. Estimating and measuring how much effort each team member dedicates to each project is complicated.
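The "at least 3 employees per expertise subset" rule from the vacation-planning point can be checked mechanically. The sketch below assumes a hypothetical skill matrix (engineer names and expertise labels are made up) and lists the subsets that would be at risk during someone's absence.

```perl
use strict;
use warnings;

# Minimum number of people per expertise subset, as stated in the text.
my $MIN_COVERAGE = 3;

# Hypothetical skill matrix: engineer => expertise subsets they can cover.
my %skills = (
    anna  => ['projA-network', 'projA-tools', 'projB-backup'],
    ben   => ['projA-network', 'projB-backup', 'projC-erp'],
    chris => ['projA-network', 'projA-tools', 'projC-erp'],
    dana  => ['projB-backup', 'projA-tools'],
);

# Invert the matrix: expertise subset => number of engineers covering it.
my %coverage;
for my $engineer (keys %skills) {
    $coverage{$_}++ for @{ $skills{$engineer} };
}

# List expertise subsets covered by fewer than $MIN_COVERAGE people.
my @at_risk = grep { $coverage{$_} < $MIN_COVERAGE } sort keys %coverage;
print "Under-covered: @at_risk\n";
```

Every project added to a shared team adds rows to this matrix, which is one way to see why the planning gets messy so quickly.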

Therefore I’m suggesting a universal measure of the complexity of a shared team member’s life. The logic is not to measure resources, but to measure a team’s level of complications. In my logic, each complication adds 10% work overhead per project. I didn’t factor 0.1 out into a single constant, because the coefficient may vary from complication to complication and be more or less severe depending on circumstances. The complications add up to additional workload, which is then shared among the team members.

The formula below shouldn’t be considered a precise or scientific measure of any kind.

The idea is to get a complexity multiplier ($complexity) that indicates the fraction of complexity overhead for the team. The multiplier can be used to derive the additional workforce required to manage all the projects in the team.

We may assume that $complexity values from 0 to 1 stand for healthy teams, 1 to 1.25 for slightly overloaded teams, and above 1.25 for doomed, overshared teams :).
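Written out as a formula, the Perl code that follows computes the expression below. The symbols are my own shorthand for the code's variables: $P$ is the number of projects (`$proj_amount`), $T$ the number of team members (`$team_members`), and $c_i$ the overhead coefficient of complication $i$ (0.1 for each of the nine complications).

$$
\text{complexity} = \frac{P \sum_{i=1}^{9} c_i}{T},
\qquad
\text{team members required} = \text{complexity} \times T = P \sum_{i=1}^{9} c_i
$$

With the sample values $P = 6$ and $T = 3$, this gives $9 \times 0.1 \times 6 / 3 = 5.4 / 3 = 1.8$, which falls in the doomed band above 1.25, and $1.8 \times 3 = 5.4$ team members required.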

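The formula in Perl code:

```perl
use strict;
use warnings;

my $proj_amount  = 6;    # sample value: number of projects served by the team
my $team_members = 3;    # sample value: number of people in the shared team

# Each complication adds 10% overhead per project.
my $proj_sow    = 0.1 * $proj_amount;    # learning each project's scope of work
my $proj_expert = 0.1 * $proj_amount;    # per-project technical expertise subsets
my $team_expert = 0.1 * $proj_amount;    # covering expertise during absences
my $proj_tools  = 0.1 * $proj_amount;    # per-customer ITSM/administration tools
my $proj_proc   = 0.1 * $proj_amount;    # per-project processes and escalation charts
my $proj_KPI    = 0.1 * $proj_amount;    # per-project KPI reporting
my $team_tasks  = 0.1 * $proj_amount;    # prioritization and interruptions
my $team_mgmt   = 0.1 * $proj_amount;    # multiple project managers
my $team_capct  = 0.1 * $proj_amount;    # capacity management

# Total overhead shared among the team members.
my $complexity = ($proj_sow + $proj_expert + $team_expert + $proj_tools
    + $proj_proc + $proj_KPI + $team_tasks + $team_mgmt + $team_capct)
    / $team_members;

print "Project complexity: $complexity\n";
print "Team members required: $team_members * $complexity = ",
    $complexity * $team_members, "\n";
```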

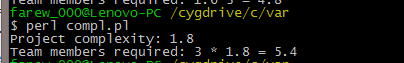

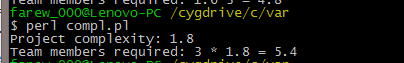

Sample run
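Since the coefficient may vary from complication to complication, one possible generalization keeps a coefficient per complication in a hash and maps the result onto the health bands suggested earlier. This is a sketch, not the original script: the `complexity` and `health` helper names are hypothetical, all coefficients are left at the text's default of 0.1, and boundary values (exactly 1 or 1.25) are assigned to the healthier band as an assumption.

```perl
use strict;
use warnings;

# Overhead per project for each complication; 0.1 (10%) is the text's default,
# and any coefficient can be tuned up or down for your circumstances.
my %coefficient = (
    proj_sow   => 0.1, proj_expert => 0.1, team_expert => 0.1,
    proj_tools => 0.1, proj_proc   => 0.1, proj_KPI    => 0.1,
    team_tasks => 0.1, team_mgmt   => 0.1, team_capct  => 0.1,
);

# Sum of (coefficient * number of projects), divided among the team members.
sub complexity {
    my ($proj_amount, $team_members, $coef) = @_;
    my $overhead = 0;
    $overhead += $_ * $proj_amount for values %$coef;
    return $overhead / $team_members;
}

# Bands from the text: 0-1 healthy, 1-1.25 slightly overloaded, above 1.25 doomed.
sub health {
    my ($c) = @_;
    return 'healthy'             if $c <= 1;
    return 'slightly overloaded' if $c <= 1.25;
    return 'doomed, overshared';
}

# [projects, team members] sample combinations.
for my $case ([2, 3], [4, 3], [6, 3]) {
    my ($projects, $members) = @$case;
    my $c = complexity($projects, $members, \%coefficient);
    printf "%d projects, %d members: complexity %.2f (%s)\n",
        $projects, $members, $c, health($c);
}
```

Raising a single coefficient (for example `team_tasks` for a team with frequent interruptions) changes the result without touching the rest of the formula.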