I am obsessed with performance. Any bottleneck I encounter is a personal insult. CPU performance gains are minimal these days, GPUs are still improving steadily, and I/O is advancing quite well too. This post is about I/O.

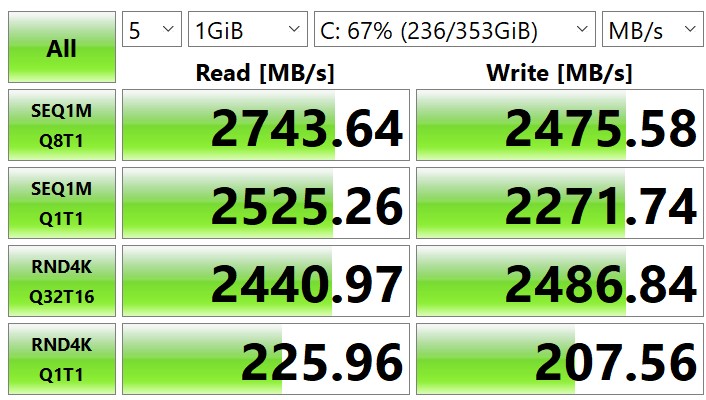

So I indulged myself and built a RAID 0 array out of two Samsung 970 EVO Plus drives on the DMI bus. It works, but not as fast as I expected. Windows boot time seemed slow, while other tasks seemed OK. I suspected it could be faster using the DIMM.2 module of my ASUS ROG Maximus XI Extreme. Maybe. So I tried to make it faster. I started with the following benchmark results:

The numbers are high but not as high as I wanted them to be.

- There is no RND4K gain from RAID 0 compared to a single 970 EVO Plus.

- Overall RAID performance is capped by the DMI link's maximum speed of about 4000 MB/s.
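Both observations follow from how RAID 0 striping works. Below is a minimal sketch, assuming the textbook layout where stripe *i* lives on drive *i mod n* and an assumed 128 KiB stripe size (neither Intel RST's exact on-disk layout nor this array's stripe size is stated here). A small random read falls inside one stripe and therefore touches a single drive, so at queue depth 1 the second drive contributes nothing. Large sequential transfers are split across both drives until the shared link saturates. The 3500 MB/s per-drive figure is an assumed rated sequential read, not a measurement, and `drives_touched` / `seq_ceiling_mb_s` are hypothetical helpers.

```python
from __future__ import annotations

STRIPE_SIZE = 128 * 1024  # assumed stripe size in bytes (hypothetical)


def drives_touched(offset: int, length: int, n_drives: int, stripe: int = STRIPE_SIZE) -> set[int]:
    """Member drives hit by a read of [offset, offset + length) in a textbook RAID 0 layout."""
    first = offset // stripe
    last = (offset + length - 1) // stripe
    # Stripe i is assumed to live on drive i mod n_drives.
    return {s % n_drives for s in range(first, last + 1)}


def seq_ceiling_mb_s(per_drive_mb_s: float, n_drives: int, bus_mb_s: float) -> float:
    """Best-case sequential throughput: striping adds drive bandwidth until the shared bus caps it."""
    return min(per_drive_mb_s * n_drives, bus_mb_s)


# A 4 KiB read inside one stripe hits a single drive, so RND4K at QD1 cannot gain.
print(drives_touched(offset=5 * 4096, length=4096, n_drives=2))  # {0}
# A 1 MiB sequential read spans 8 stripes and is split across both drives.
print(drives_touched(offset=0, length=1024 * 1024, n_drives=2))  # {0, 1}
# Two drives at an assumed ~3500 MB/s each behind a ~4000 MB/s DMI link.
print(seq_ceiling_mb_s(3500, 2, 4000))  # 4000
```

The min() in the ceiling is the whole story of the DMI bottleneck: adding a third drive would not move the number at all.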

Attempt 1. Moving the OS from RAID 0 on DMI to the DIMM.2 module

The plan was to connect both existing 970 EVO+ drives via DIMM.2, which has dedicated PCIe x4 lanes from the CPU. This was supposed to take the DMI bottleneck out of RAID performance.

Starting configuration

|DMI bus

|-RAID 0 (SYSTEM, BOOTABLE)

|-Samsung 970 EVO Plus

|-Samsung 970 EVO Plus

|PCI Bus (Empty)

Configuration to be

|DMI bus (Empty)

|PCI Bus

|-RAID 0 (SYSTEM, BOOTABLE)

|-Samsung 970 EVO Plus

|-Samsung 970 EVO Plus

To achieve this, I had to:

- Physically remove the SSDs from under the GPU and the heatsink, which meant taking both of them out.

- Attach the SSDs to the DIMM.2 module.

- Create a new RAID 0 from scratch, losing all data on the old RAID 0 (I had a backup, of course).

- Restore the backup to the new RAID.

- Find out that a 970 EVO+ RAID on DIMM.2 is not bootable.

- Undo all the steps.

Later research revealed that only Intel SSDs can boot from CPU PCIe lanes, possibly even in a RAID configuration, though that part is still unclear to me. The only Intel SSDs faster than the 970 EVO+ are the 900 series, and the only 900-series drive in the M.2 form factor is the 905p 380 GB. A single one cost $500, which is expensive enough. I decided not to go for RAID, but to try the seemingly exceptional random I/O feel of the 905p as a single drive.
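Why random I/O "feel" is a different axis from sequential bandwidth can be shown with Little's law: with one request in flight (queue depth 1), IOPS is simply 1 / per-request latency, so a lower-latency drive wins regardless of its sequential numbers. Striping does not change this, because at QD1 only one request is ever outstanding and it is served by one member drive. A minimal sketch; the two latencies are hypothetical round numbers for illustration, not measured 905p or 970 EVO Plus figures, and `iops` / `throughput_mib_s` are hypothetical helpers.

```python
def iops(queue_depth: int, latency_s: float) -> float:
    """Little's law: completions per second = requests in flight / time per request."""
    return queue_depth / latency_s


def throughput_mib_s(queue_depth: int, latency_s: float, block_bytes: int = 4096) -> float:
    """Throughput for fixed-size requests (4 KiB by default) at the given queue depth."""
    return iops(queue_depth, latency_s) * block_bytes / (1024 * 1024)


# Hypothetical per-request latencies, for illustration only (not measured values).
for name, lat in [("drive A, 80 us", 80e-6), ("drive B, 10 us", 10e-6)]:
    print(f"{name}: {iops(1, lat):,.0f} IOPS, {throughput_mib_s(1, lat):.1f} MiB/s at QD1")
```

At higher queue depths the formula still holds, which is why benchmarks at QD32 can hide latency differences that you feel at QD1 while booting.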

Attempt 2. Going Optane

So Intel SSD it is. $500 later, I could finally install a new 905p 380 GB in my DIMM.2 slot. The layout plan looked like this:

Starting configuration

|DMI bus

|-RAID 0 (SYSTEM, BOOTABLE)

|-Samsung 970 EVO Plus

|-Samsung 970 EVO Plus

|PCI Bus

|- GPU (16x lanes)

Configuration to be

|DMI bus

|-RAID 0

|-Samsung 970 EVO Plus

|-Samsung 970 EVO Plus

|PCI Bus

|- GPU (8x lanes)

|- DIMM.2 (4x lanes)

|- Intel 905p (SYSTEM, BOOTABLE)
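The GPU dropping from x16 to x8 in this layout comes down to lane arithmetic. A minimal sketch, assuming (this is not stated above) that the CPU provides 16 PCIe lanes that the board can only bifurcate as x16, x8/x8 or x8/x4/x4, which is typical of mainstream Intel desktop CPUs; `ALLOWED_SPLITS`, `fits_split` and `first_split` are hypothetical helpers.

```python
from __future__ import annotations

# Assumption: 16 CPU PCIe lanes, splittable only as x16, x8/x8 or x8/x4/x4.
ALLOWED_SPLITS = [(16,), (8, 8), (8, 4, 4)]


def fits_split(requests: list[int], split: tuple[int, ...]) -> bool:
    """True if every requested link width gets its own port at least that wide."""
    if len(requests) > len(split):
        return False
    # Pairing the widest request with the widest port is sufficient for this check.
    ports = sorted(split, reverse=True)
    return all(r <= p for r, p in zip(sorted(requests, reverse=True), ports))


def first_split(requests: list[int]):
    """Return the first allowed split that satisfies all requests, or None."""
    for split in ALLOWED_SPLITS:
        if fits_split(requests, split):
            return split
    return None


# GPU at full x16 plus one x4 DIMM.2 slot: no allowed split works.
print(first_split([16, 4]))    # None
# GPU dropped to x8 plus one x4 DIMM.2 slot.
print(first_split([8, 4]))     # (8, 8)
# GPU at x8 plus two x4 DIMM.2 slots.
print(first_split([8, 4, 4]))  # (8, 4, 4)
```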

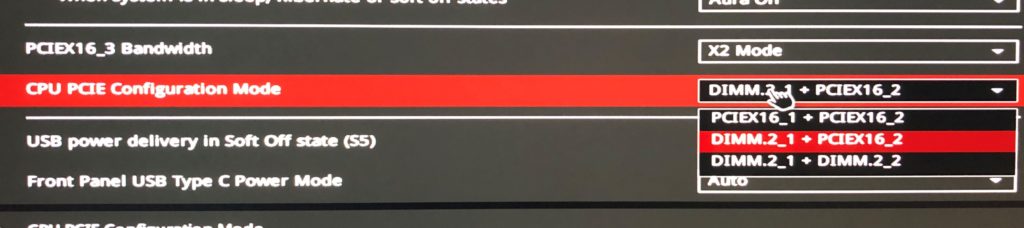

This time I didn’t need to move hardware around the motherboard. The installation only required mounting the SSD on the DIMM.2 card and plugging the card into the motherboard. Simple. Plus a little BIOS adjustment to reroute CPU lanes from the GPU to DIMM.2.

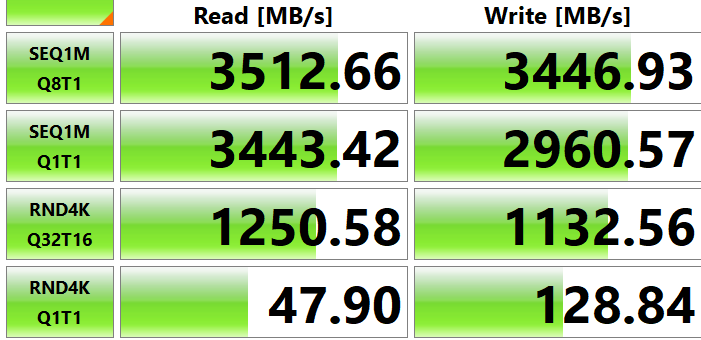

The software migration was harder. I copied my OS disk image with Acronis from the RAID 0 array to the 905p. It didn’t boot at first, but booted after a couple of attempts, possibly after installing some driver. To my unpleasant surprise, the 905p didn’t perform to its datasheet specs.

It was a lot slower! At this point I had no idea whether it was a hardware or a software issue. The device worked, but not as fast as it was supposed to. I suspected one of the following:

1. Wrong BIOS configuration

2. Wrong driver or OS settings

3. Wrong SSD placement on the motherboard (DIMM.2 instead of the DMI-connected slots). Not a disaster: I could move a single 905p to a DMI slot, where it shouldn’t be throttled.

4. Some exotic IRST bottleneck, such as an inability to work on CPU lanes with the Z390 chipset, or a speed limit while a RAID 0 is still running on DMI (PLEASE NOT THIS)

5. Performance degradation from the Spectre/Meltdown hotfixes (NONONO)

The BIOS configuration was simple to check and rule out. On the ROG motherboard, all you can do is assign 4 or 8 PCIe lanes to DIMM.2; there are no other options. I tried both, got no improvement, and moved on to the Windows mysteries.

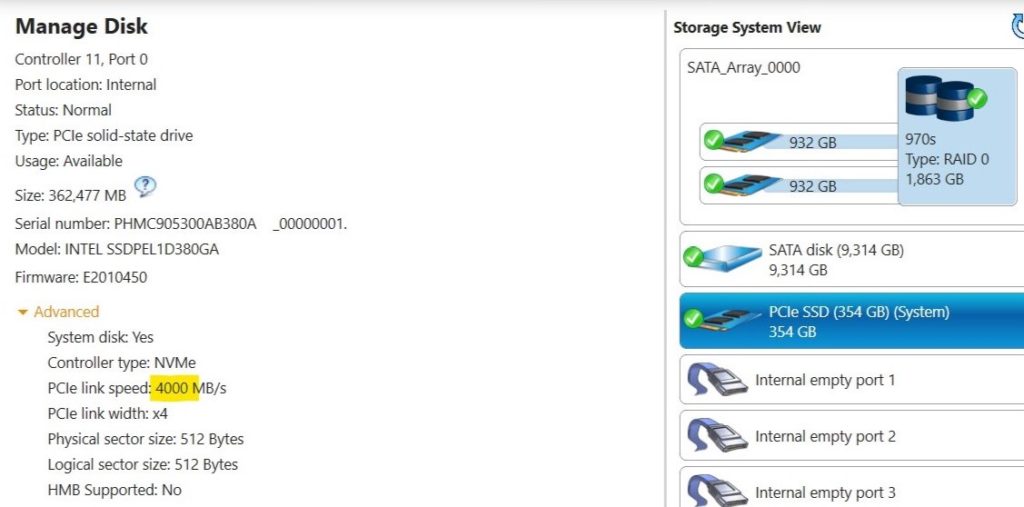

Since the performance didn’t match the specs, something seemed to be bottlenecking it. It was really hard to tell what exactly. Apart from the benchmarks, my only clue was the PCIe link speed reported by the IRST suite: 2000 MB/s, where 4000 MB/s was expected. I didn’t take a screenshot back then; the one below was taken later, with the correct settings.

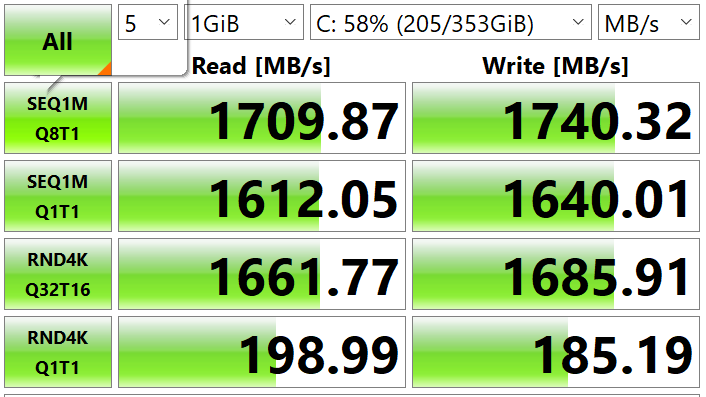
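A reading of 2000 MB/s instead of 4000 MB/s is roughly what you would see if the link had trained at half its capability. A rough sketch of the arithmetic, assuming the usual per-lane transfer rates and line encodings (8b/10b for PCIe 1.x/2.0, 128b/130b for 3.0/4.0) and ignoring packet overhead: a PCIe 3.0 x4 link gives about 3940 MB/s, while both a 3.0 x2 link and a 2.0 x4 link land near 2000 MB/s. Which of these, if either, IRST was actually reporting is not known; `link_mb_s` is a hypothetical helper.

```python
# Per-generation transfer rate (GT/s per lane) and line-encoding efficiency.
PCIE_GENS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def link_mb_s(gen: int, width: int) -> float:
    """One-direction link bandwidth in MB/s after line encoding (packet overhead ignored)."""
    gt_s, efficiency = PCIE_GENS[gen]
    # GT/s * 1000 -> Mbit/s per lane; * efficiency -> payload bits; / 8 -> MB/s.
    return gt_s * 1000 * efficiency / 8 * width


for gen, width in [(3, 4), (3, 2), (2, 4)]:
    print(f"PCIe {gen}.0 x{width}: {link_mb_s(gen, width):.0f} MB/s")
# PCIe 3.0 x4: 3938 MB/s
# PCIe 3.0 x2: 1969 MB/s
# PCIe 2.0 x4: 2000 MB/s
```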

I couldn’t find the bottleneck, let alone eliminate it. I tried shuffling drivers around, with no progress. I tried booting Fedora to check the performance outside Windows, again with no progress. My options for driver shuffling were limited, since the 905p was my OS disk. So I decided to reinstall Windows from scratch. And it worked. Sadly, I couldn’t identify the root cause, but it must have been related to moving the OS sector by sector from a RAID volume to a single-drive volume, which caused some unknown malfunction within Windows. I’m happy it was assumption 2, not 3, 4 or 5; any of those would have been close to impossible to fix.
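For a check from Linux like the Fedora boot described above, the kernel exposes the negotiated PCIe link of each device in sysfs as `current_link_speed` and `current_link_width`, alongside `max_link_speed` and `max_link_width`, under the device's PCI directory. A minimal sketch that compares them; the path `/sys/class/nvme/nvme0/device` assumes the drive is the first NVMe controller, and the speed string (typically something like "8.0 GT/s PCIe") is parsed loosely by taking its first token. `read_link` and `is_degraded` are hypothetical helpers. Note that the `max_` values describe the device's capability, so a slower slot can also show up as "degraded".

```python
from pathlib import Path


def read_link(dev_dir: Path) -> dict:
    """Read negotiated and maximum PCIe link speed/width from a sysfs PCI device directory."""
    def attr(name: str) -> str:
        return (dev_dir / name).read_text().strip()

    def speed(name: str) -> float:
        # e.g. "8.0 GT/s PCIe" -> 8.0; raises ValueError if the kernel reports an unknown speed
        return float(attr(name).split()[0])

    return {
        "cur_speed": speed("current_link_speed"),
        "max_speed": speed("max_link_speed"),
        "cur_width": int(attr("current_link_width")),
        "max_width": int(attr("max_link_width")),
    }


def is_degraded(link: dict) -> bool:
    """True if the link trained slower or narrower than the device's advertised maximum."""
    return link["cur_speed"] < link["max_speed"] or link["cur_width"] < link["max_width"]


if __name__ == "__main__":
    dev = Path("/sys/class/nvme/nvme0/device")  # assumed: first NVMe controller
    if dev.exists():
        link = read_link(dev)
        print(link, "DEGRADED" if is_degraded(link) else "OK")
    else:
        print("no nvme0 device found")
```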

Conclusion: you CAN have a bootable SSD on CPU lanes with the ASUS ROG Maximus XI Extreme and the Z390 chipset. It also likely supports RAID 0 on DIMM.2 over CPU PCIe lanes, since the BIOS lets you add a DIMM.2 drive to the drive pool when creating a RAID array. Maybe it can be made into a bootable RAID too, but that’s hard to tell for sure. The motherboard documentation is really bad at describing this functionality, and the same goes for Intel’s compatibility charts and every other datasheet I found. I know for sure it can be bootable. I know it can be part of a RAID. Can it be both? Go figure. I want to know, but I don’t feel like spending an extra $500 to test it.